3-D transistor will indeed help Intel beat back ARM, IHS iSuppli says

xbit Labs

ARM Not Afraid of Intel's 22nm/Tri-Gate Process Technology - Company.

Wired Revolution

Intel’s 3D tri-gate Transistor Redesign Brings Huge Efficiency Gains

+ there were a lot more...

These news articles got me thinking...

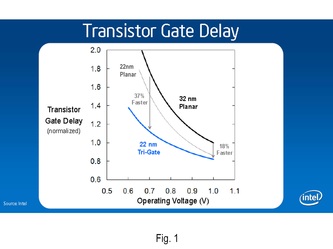

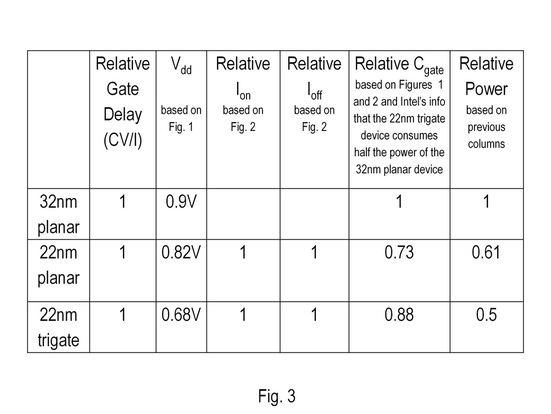

- Intel says their 22nm trigate device gave them 50% lower power or 37% higher performance vs. their 32nm planar device. But how much power did the 22nm trigate device save vs. the 22nm planar device? If ARM has a planar device at 22nm, then that is the important comparison!

- How much chip power can Intel save by using the 22nm trigate device in a microprocessor (instead of a 22nm planar device)? Interconnects and clocks consume a significant portion of the total power in microprocessors today... so the question is: how much does transistor innovation impact the overall chip?

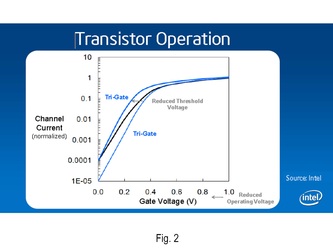

- With the tri-gate MOSFET, you can get the same MOSFET performance (CV/I delay) at lower operating voltages than a planar MOSFET. Trigate @ 22nm gives ~140mV of supply voltage reduction vs. planar @ 22nm.

- Transistor dynamic power: Trigate @ 22nm is 50% lower than planar @ 32nm. But when compared to planar @ 22nm, the advantage reduces to 19%.

- Transistor drive resistance: This quantity is approximately proportional to the ratio of supply voltage and drive current... it improves by 18% by using the trigate @ 22nm vs. the planar @ 22nm. This is an important metric, since it allows one to drive interconnect capacitance faster.
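The two dynamic-power percentages above can be combined to estimate how much of the headline 50% comes from the node shrink alone. This is a back-of-the-envelope sketch, assuming both percentages describe the same dynamic-power metric and that the two steps multiply; the function name `implied_reduction` is made up for illustration.

```python
def implied_reduction(total_reduction, partial_reduction):
    """Reduction left over for the other step when two steps multiply.

    total_reduction:   0.50 -> trigate @ 22nm vs. planar @ 32nm
    partial_reduction: 0.19 -> trigate @ 22nm vs. planar @ 22nm
    """
    # (1 - total) = (1 - shrink) * (1 - partial), solved for shrink
    remaining_ratio = (1.0 - total_reduction) / (1.0 - partial_reduction)
    return 1.0 - remaining_ratio


# Assumption: the 50% and 19% figures are directly comparable.
shrink_only = implied_reduction(0.50, 0.19)
print(f"planar @ 22nm vs. planar @ 32nm: {shrink_only:.1%} lower dynamic power")
```

Under these assumptions, roughly 38% of the saving would come from moving a planar device from 32nm to 22nm, with the tri-gate structure adding the remaining 19% on top.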

While Intel's press announcement had detailed transistor data, it did not include much information on the chip-level power savings possible with the trigate device. This question is important since, as I said above, clock power and interconnect power form a big percentage of chip power today...

I did some calculations to answer this question.

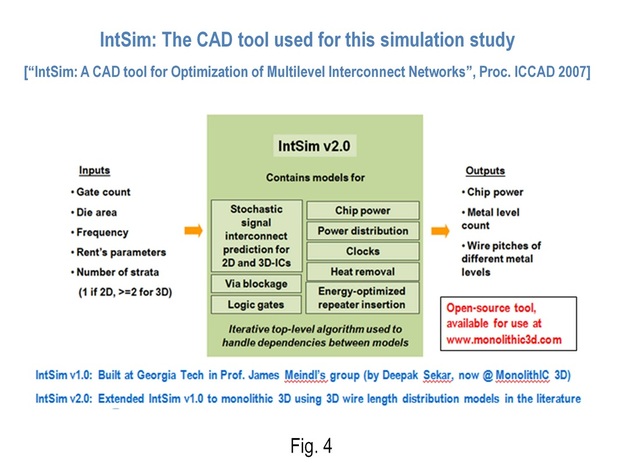

I used an open-source chip simulator called IntSim for this purpose. IntSim has models that describe various aspects of a modern-day chip, and its results show a good fit to actual data from past Intel microprocessors. Please see Fig. 4 for more details, and also check out the original paper about IntSim at the 2007 Intl. Conference on Computer-Aided Design (ICCAD). A GUI-based version of IntSim is available at this link (for free use).

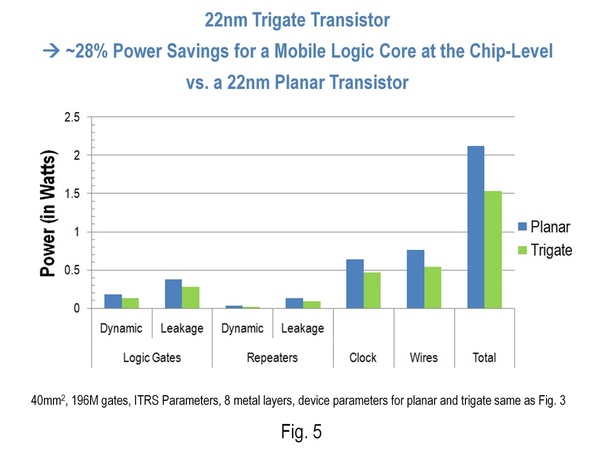

- The 140mV supply voltage reduction enabled by the tri-gate device is very useful, since it saves clock power and interconnect power too (these quantities are proportional to the square of the supply voltage). You'll notice a 28% reduction in clock power and interconnect power in Fig. 5.

- Transistor drive resistance (Vdd/Ion) reduction provided by the tri-gate device allows it to drive interconnect capacitive loads better. Hence we can use smaller gates for the same performance target. This, coupled with the transistor power benefits shown in Fig. 3, gives a 28% power reduction for logic gates in the design.

- Repeater power goes down by 32% due to the better transistors. As many of you know, the delay of a long interconnect with repeaters is proportional to Sqrt(RC time constant of wires * RC time constant of transistors). So, better transistors lead to repeated wires with improved performance and power.
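The two scaling relations used in the list above (power proportional to the square of the supply voltage, and repeated-wire delay proportional to the square root of the product of the two RC time constants) can be sketched as follows. This is not IntSim code, just a minimal illustration: the function names are made up, the baseline supply voltage is not given in the post and is back-solved here, and treating the 18% drive-resistance improvement as an 18% lower transistor RC time constant assumes the driven capacitance stays fixed.

```python
import math


def dynamic_power_ratio(vdd_new, vdd_old):
    """Ratio of CV^2-type power at fixed capacitance and frequency."""
    return (vdd_new / vdd_old) ** 2


def baseline_vdd_for_reduction(delta_v, reduction):
    """Baseline Vdd for which lowering it by delta_v cuts V^2 power by `reduction`.

    Solves ((V - delta_v) / V)^2 = 1 - reduction for V.
    """
    return delta_v / (1.0 - math.sqrt(1.0 - reduction))


def repeated_wire_delay_ratio(transistor_rc_ratio, wire_rc_ratio=1.0):
    """Delay ratio of a repeated wire, delay ~ sqrt(RC_wire * RC_transistor)."""
    return math.sqrt(wire_rc_ratio * transistor_rc_ratio)


# Assumption: the 28% clock/interconnect saving comes purely from V^2 scaling.
baseline_vdd = baseline_vdd_for_reduction(delta_v=0.140, reduction=0.28)
print(f"implied planar @ 22nm Vdd: {baseline_vdd:.3f} V")
print(f"power ratio check: {dynamic_power_ratio(baseline_vdd - 0.140, baseline_vdd):.2f}")

# Assumption: transistor RC scales with drive resistance (18% better), wires unchanged.
print(f"repeated-wire delay ratio: {repeated_wire_delay_ratio(0.82):.3f}")
```

If the 28% figure were due to voltage scaling alone, it would imply a planar supply voltage a little above 0.9 V. Note also that the square-root dependence means an 18% better transistor buys only about a 9% faster repeated wire when the wires themselves do not improve.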

Will Trigate play an important role in the Intel-ARM tussle?

As an engineer, I like the fact that Intel is taking the FinFET into manufacturing - it is a fantastic technical achievement. The FinFET has been shown to work at channel lengths as low as 10nm, and gives neat I-V characteristics. I know some of the original developers of the FinFET @ UC Berkeley as well, and it is always nice to see your colleagues get their innovations into the marketplace.

But will the FinFET play an important role in the Intel-ARM tussle?

I don't think so. Let me explain why...

There are several variables involved in the Intel-ARM equation:

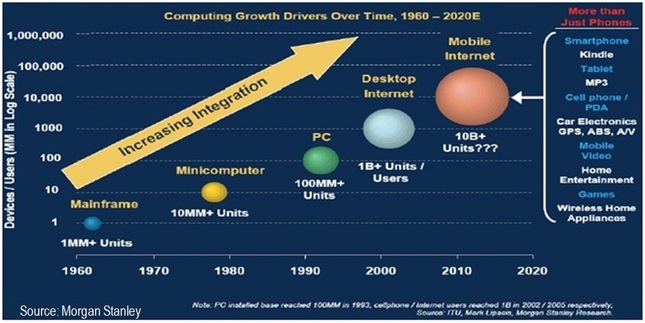

- ARM has momentum in the mobile space. I've learned from past experience that displacing a technology or product entrenched in the marketplace is very difficult.

- Intel's x86 architecture is CISC, while ARM's architecture is RISC. RISC architectures have historically given higher performance per watt than CISC in the mobile space. Can x86 bridge this gap?

- Intel chips are one process generation ahead of ARM chips. This is a valuable advantage.

- ARM chips are typically made in low-cost East-Asian fabs. For example, fabs in Taiwan are known to be 20-50% cheaper than fabs in the US, at the same technology node. This is due to additional government concessions, lower building costs and lower labor costs. All these years, having fabs in the US did not hurt Intel, since its only competition was AMD (which had fabs in Europe, which are equally or more costly). But while competing with ARM, low-cost fabs could be important.

- There are many more suppliers of ARM chips than x86 ones. Customers like more competition, since it keeps prices down.

- Who will be the first to "productize" other breakthrough technologies, such as 3D stacking of DRAM atop logic, and monolithic 3D? Intel or ARM? Some of these technologies could provide more benefits to mobile chip power, performance and die size than trigate.

- Intel has a lot more resources than players in the ARM world - this could be useful, especially in this day and age when designs cost $100M, fabs cost $7B and process R&D costs $2B.

- Will Microsoft execute on its goal to get Windows 8 on ARM? How soon and how well will it execute? This is a crucial business issue for laptops and netbooks, but may not impact the smart-phone world much.

What do you think will happen in the years to come? Please feel free to share your thoughts and opinions...

- Post by Deepak Sekar
