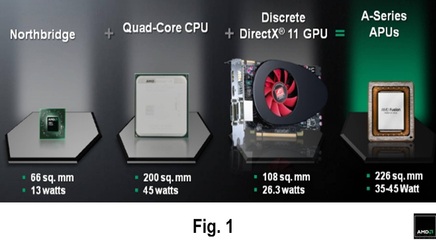

Moore's Law is amazing. Fig. 1 shows what I mean. A few years back, we had separate chips for the CPU, the GPU and the Northbridge of a PC. Today, Intel and AMD integrate all these components onto a single low-power chip! The customer pays for one chip instead of three. Doubling the number of transistors every two years is powerful.

This integration has important implications for the industry, and in particular for discrete GPU vendors such as nVIDIA.

Why? Because, in the long term, it could eat away at a big chunk of the discrete GPU market.

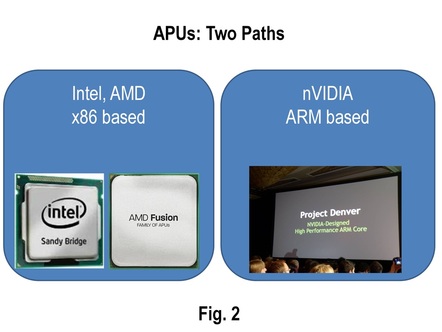

Guess what nVIDIA is doing to stay alive? It has started building a CPU-GPU combo chip too! And since it doesn't have an x86 license, it's integrating an ARM-based CPU with its GPU. Luckily for nVIDIA, Microsoft is building versions of Windows that run on ARM. At the Consumer Electronics Show 2011, Microsoft demonstrated ARM chips running Windows and applications such as Word and PowerPoint (Steve Ballmer, the Microsoft CEO, makes a guest appearance in the YouTube video of the demo). Windows on ARM may not be far-fetched after all.

We therefore have two approaches to CPU-GPU combos, better known as APUs (Accelerated Processing Units). AMD and Intel are going the x86 route, while nVIDIA is trying to produce an ARM-based APU (Fig. 2). For decades, PCs have been built solely with x86 chips, and now ARM suppliers such as nVIDIA are coming to the table. I believe this competition could change our industry. x86 vendors have gross margins (55-60%) significantly higher than the rest of our industry (30-40%), largely because they have been the "exclusive" providers of chips for Windows-based PCs. Loss of exclusivity and increased competition could significantly hurt x86 vendors' gross margins and long-term growth prospects. Whether this potential actually translates into market change remains to be seen. Intel and AMD have staved off many challengers over the years!

Why does it make sense to integrate CPUs and GPUs on the same die?

According to Sam Naffziger,

- Future workloads are expected to be media-rich. Sam quoted an interesting statistic: our ability to process language is limited to about 150 words per minute, whereas the human brain can process visual information at 400 to 2000 times that rate. As a result, we increasingly use visual experiences to communicate with each other. Examples include YouTube, Facebook Photos and the use of voice interfaces instead of keyboards. Data mining and visualization are also important future workloads. All of these workloads benefit tremendously from GPU-style computation.

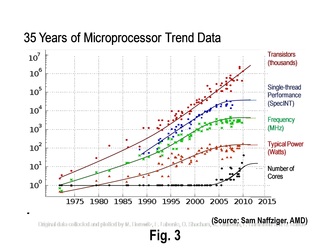

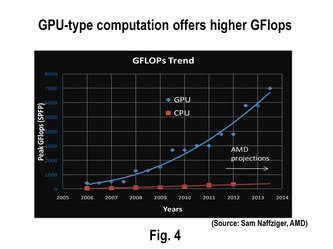

- Single-thread performance is plateauing (Fig. 3). At the same time, GPUs offer far more floating-point operations per second (FLOPS) than CPUs (Fig. 4). Because GPUs are massively parallel, they can turn higher transistor density, which Moore's Law continues to deliver, into more performance, so they may keep scaling. If Moore's Law keeps supplying additional transistors, it makes sense to spend them on integrating a GPU on the die.

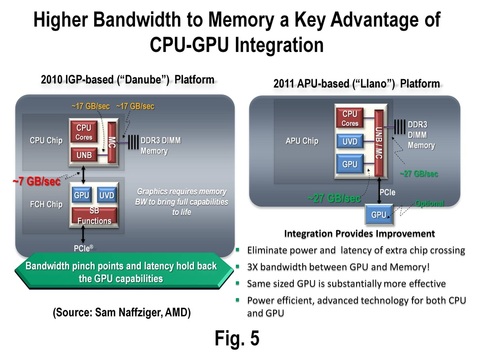

- Fig. 5 shows that integrating the GPU on the same die as the CPU gives it 3x higher bandwidth to DRAM. This is a key advantage of CPU-GPU integration and translates into better graphics performance.
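The two hardware arguments above (more FLOPS on the GPU, and more DRAM bandwidth once it is on-die) interact with each other. One way to see this is the roofline model, a standard performance model that was not part of Sam's talk: attainable throughput is the lower of peak compute and DRAM bandwidth times arithmetic intensity (FLOPs performed per byte fetched). The Python sketch below is only an illustration; the lane count, clock and bandwidth values are hypothetical placeholders (only the 3x bandwidth ratio echoes Fig. 5), and the function names are made up for this example.

```python
# Roofline-model sketch. This model is NOT from Sam Naffziger's talk, and every
# hardware number below is a hypothetical placeholder; only the 3x bandwidth
# ratio echoes Fig. 5.


def peak_flops(lanes: int, clock_hz: float, flops_per_lane_per_cycle: int = 2) -> float:
    """Peak FLOP/s = parallel ALU lanes x clock rate x FLOPs per lane per cycle.

    The default of 2 assumes each lane completes one fused multiply-add per cycle.
    """
    return lanes * clock_hz * flops_per_lane_per_cycle


def attainable_flops(peak: float, bandwidth_bytes_per_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof."""
    memory_roof = bandwidth_bytes_per_s * intensity_flops_per_byte
    return min(peak, memory_roof)


if __name__ == "__main__":
    # Hypothetical GPU: 400 lanes at 600 MHz, i.e. 480 GFLOP/s peak.
    gpu_peak = peak_flops(lanes=400, clock_hz=600e6)

    # Hypothetical DRAM bandwidths: a baseline link and one 3x faster.
    for label, bandwidth in [("1x bandwidth", 10e9), ("3x bandwidth", 30e9)]:
        for intensity in (1.0, 10.0, 100.0):
            gflops = attainable_flops(gpu_peak, bandwidth, intensity) / 1e9
            print(f"{label}, {intensity:5.1f} FLOP/byte -> {gflops:6.1f} GFLOP/s")
```

With these placeholder numbers, the low-intensity cases (1 and 10 FLOP/byte) run 3x faster with 3x the bandwidth, while the 100 FLOP/byte case is capped at the 480 GFLOP/s compute roof either way. Workloads that move a lot of data per operation sit on the bandwidth-limited side of the roofline, which is exactly where the bandwidth gain from integration helps.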

According to Sam Naffziger, a number of interesting issues and opportunities come up once you start integrating the GPU on the same die as the CPU:

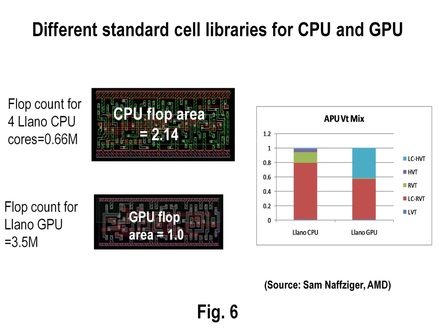

- Separate standard cell libraries for the CPU and the GPU: The GPU typically requires density-optimized libraries, while the CPU requires performance-optimized ones. Sam showed that a flip-flop from a density-optimized GPU library has 50% lower area than one from a performance-optimized CPU library (Fig. 6). Not only that, GPU libraries use higher Vt values and longer channel lengths than CPU libraries, since they don't require such high performance (Fig. 6).

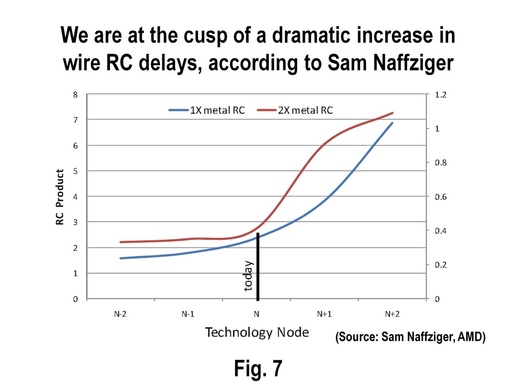

- Wire RC challenges: Sam explained that GPUs need tighter-pitch wiring because they are optimized for density, while CPUs need wider-pitch wiring because they are optimized for performance, so integrating the CPU and GPU on the same die forces trade-offs in wiring pitch selection. More importantly, Sam warned that wire RC will become a major difficulty starting with the next process generation (see Fig. 7). In his words, "we are at the cusp of a dramatic increase in wire RC delays due to a number of factors such as small geometries resulting in increased edge effect scattering, the lack of dielectric improvement and the overhead from copper cladding layers. This trend will seriously reduce both the density and performance benefits of process scaling, so some sort of revolutionary improvement in interconnect is required." I was quite happy to hear this assessment, since it bodes well for my company's monolithic 3D technology, one of the few solutions available for the on-chip interconnect problem :-)

- Supply voltage reduction: For power-density-limited designs such as APUs, Sam explained that reducing the supply voltage is crucial to scaling, since dynamic power scales roughly with the square of the supply voltage. The catch is that variability becomes a bigger challenge at lower supply voltages. Sam mentioned that fully depleted devices such as FinFETs and fully depleted SOI allow lower supply voltages and so help with APU scaling.

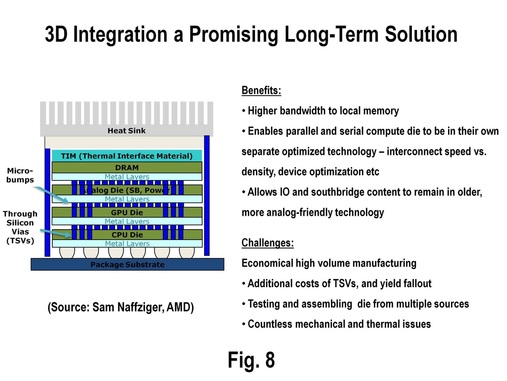

- 3D integration as a long-term solution: In the long term, Sam feels 3D integration could be a promising solution. He discussed how one could stack DRAM, the GPU, the CPU and IOs/analog on separate layers of the stack. This helps tackle memory bandwidth issues, which are key for APUs. Furthermore, GPUs and CPUs need their own optimized interconnect stacks and device libraries, so stacking GPUs and CPUs in 3D might be useful, especially for mobile applications that are less constrained by heat. However, Sam feels that manufacturing 3D stacks still poses many challenges today. Please see Fig. 8 for more details.
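The wire RC concern in Sam's quote, and why stacking helps, can be made concrete with a first-order textbook model (not something Sam presented): a distributed RC wire has a 50% delay of roughly 0.38·R·C, where R = ρL/A with A the copper cross-section left after the barrier (cladding) layer, and C is dominated by coupling to the neighbouring wires. The Python sketch below is a rough illustration under loudly stated assumptions: every dimension, the dielectric constant, the 30% resistivity increase standing in for edge scattering, and the rule of thumb that N stacked layers shorten lateral wires by about 1/√N are hypothetical placeholders, and the helper `wire_delay` is made up for this example.

```python
import math

EPS0 = 8.854e-12  # vacuum permittivity, F/m


def wire_delay(length, width, thickness, spacing, rho, k, barrier=0.0):
    """Approximate 50% delay (seconds) of a distributed RC wire: ~0.38 * R * C.

    R = rho * L / A_cu, where A_cu is the copper cross-section left after a
        barrier/cladding layer of thickness `barrier` lines the two sides and
        the bottom of the trench (assumed geometry).
    C = 2 * k * eps0 * T * L / S, i.e. parallel-plate coupling to the two
        neighbouring wires only (fringing and ground capacitance ignored).
    """
    copper_area = (width - 2 * barrier) * (thickness - barrier)
    if copper_area <= 0:
        raise ValueError("barrier consumes the whole wire cross-section")
    r_total = rho * length / copper_area
    c_total = 2 * k * EPS0 * thickness * length / spacing
    return 0.38 * r_total * c_total


if __name__ == "__main__":
    # Hypothetical 1 mm wire in an older node (all values in SI units).
    base = dict(length=1e-3, width=50e-9, thickness=100e-9, spacing=50e-9,
                rho=1.7e-8, k=2.5, barrier=3e-9)
    t0 = wire_delay(**base)

    # Next node: width, thickness and spacing shrink by 0.7, the wire length is
    # unchanged, k does not improve and the barrier thickness does not scale.
    shrunk = dict(base, width=35e-9, thickness=70e-9, spacing=35e-9)
    print("shrink only:         ", wire_delay(**shrunk) / t0)

    # Same shrink with a hypothetical 30% resistivity increase from scattering.
    print("shrink + scattering: ", wire_delay(**dict(shrunk, rho=1.3 * base["rho"])) / t0)

    # Same shrink, logic split over 2 stacked layers: footprint halves, so the
    # lateral wire length shrinks by roughly 1/sqrt(2) (rule of thumb).
    stacked = dict(shrunk, length=base["length"] / math.sqrt(2))
    print("shrink + 2-layer 3D: ", wire_delay(**stacked) / t0)
```

With these placeholder numbers, the shrink alone slightly more than doubles the delay of the fixed-length wire (a bit worse than the 1/0.7² ≈ 2x from geometry alone, because the barrier does not shrink), scattering pushes it higher still, and splitting the logic across two stacked layers brings the delay back close to the original.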

If you'd like to see more details of Sam Naffziger's talk, please check out his slides. They are available here. Many thanks to Sam for allowing me to share his slides.

So long until the next blog-post, folks!
